I’m Kyle.

Having developed a high-level mastery of retouching and photography for brand campaigns, I am now focused on integrating AI into creative workflows.

I’m eager to leverage these new technologies to enhance efficiency and push the boundaries of visual storytelling.

I have compiled this page to showcase some of my recent work and express my keen interest in expanding my involvement in the entertainment industry through my talents. My experience with Netflix as a contractor introduced me to the fascinating world of key art finishing. Collaborating with designers and art directors to create exceptionally creative work has been immensely fulfilling, and I am eager to pursue more opportunities in this area.

As a Senior Retoucher with extensive experience in the advertising sector, I have worked with top brands and agencies, including Nike, Adidas, Wieden+Kennedy, Meta, Google, and many more. My skills in retouching, photography, composition, design, and motion combine to offer a unique perspective on the most effective approaches to creative projects.

This page contains work from a series of tests exploring the potential of new tools like generative AI, along with their current capabilities and limitations. The work is only as good as the assets used to make it, which in this case are a mixed bag that reflects those capabilities and limitations. I speak directly to my findings about generative AI and plan to keep refining this skill set, as I do believe, for better or worse, that it will become a very important part of the creative process in all industries.

I invite you to review my work and consider how we might collaborate. I am enthusiastic about the possibility of working together and would greatly appreciate the opportunity to discuss this further. Please feel free to contact me at your earliest convenience.

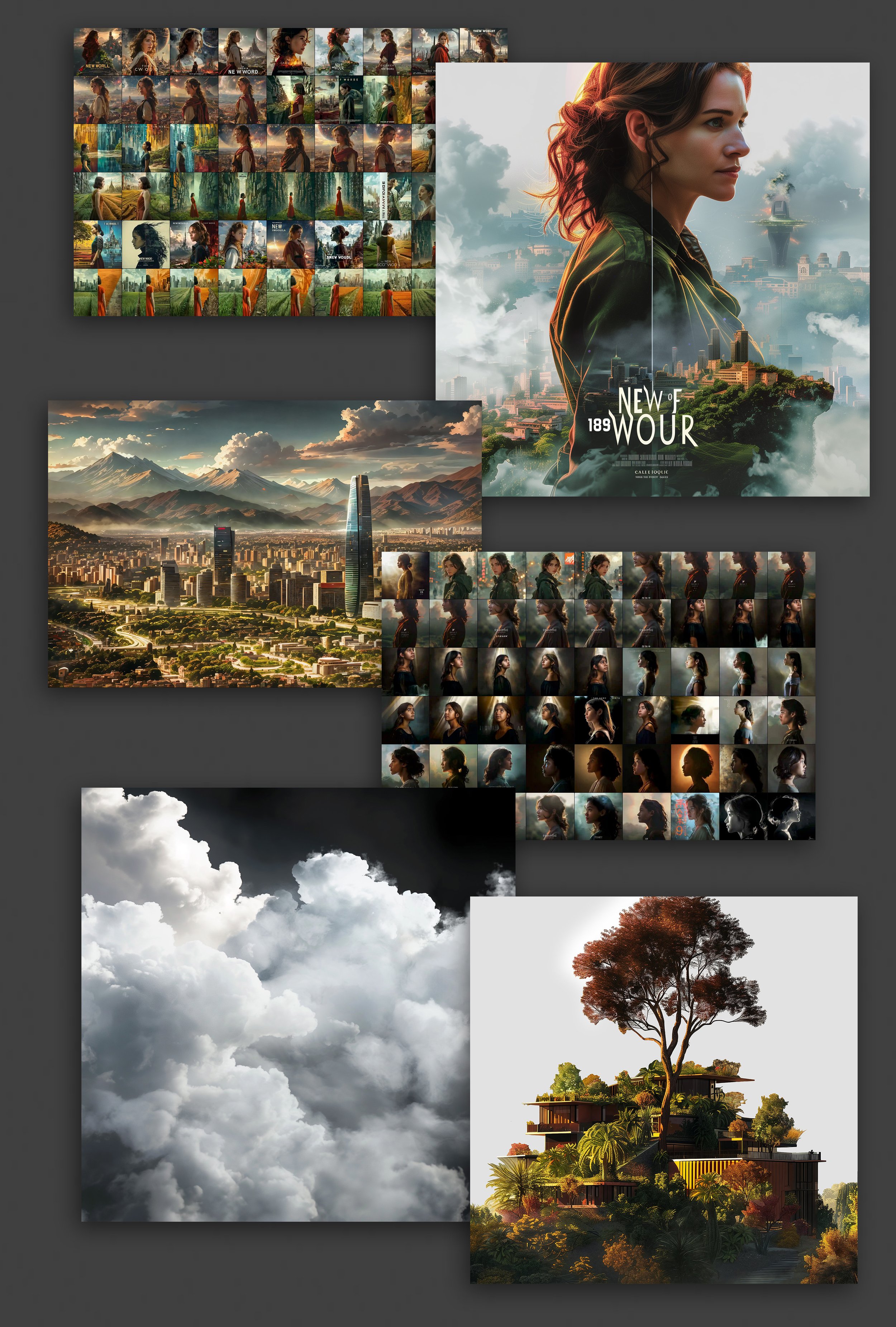

I am currently developing an ongoing experimental series of sci-fi key art using solely generative AI, as a means to explore the capabilities of these cutting-edge tools.

“New World” Key Art Comp 03

Here we are with Round Three of creating Key Art from scratch using only generative AI. This time, we focus heavily on Style Transfer as performed by Magnific AI.

To showcase what style transfer can accomplish, I chose to create a composition that displays one city skyline in many different styles, all within one composition. As always, I explain both the positive and negative aspects graphically and ethically.

The tools used in this project include:

Magnific Style Transfer: Used to create several images of the same content with different image styles applied. In this case, one city skyline rendered in four different styles.

Magnific Upscale: Style Transfer outputs low-resolution imagery. Upscale was used to increase the resolution and introduce new detail and content into the image simultaneously.

Midjourney: Utilized for image generation of source imagery.

Photoshop: This composition relies much more on traditional Photoshop work than the previous two compositions. The entire fragmented layout was created by hand in Photoshop.

After Effects: After completing the work in Photoshop, we bring our layered PSD into AE to animate. Good old-fashioned motion design.

Lessons Learned with Style Transfer

I began with the Midjourney-generated green and peaceful futuristic city of Santiago, Chile. I then transferred three other styles onto that single image: a burning calamity, a car-centric modern-day city, and a digital metaverse city from New World.

Magnific offers the following controls:

Input Image: This image serves as the content matter and structure for the style transfer. In this case, it's a clear image of a city skyline that forms the base for our various styles.

Reference Image: While this can be any image, it's helpful if it's similar in content to the Input Image. However, using Google image search for reference images can lead to ethical concerns. I have been using images from a stock library and my own body of work as reference images, which for the most part has covered what I needed.

Style Strength: This control determines how much of the reference image style is reflected in the output.

Structure Strength: This control determines how much of the input image's structure is retained in the output.

Other Controls: Magnific offers different render engines that may be suitable for various purposes, such as illustrated art or 3D-style outputs. However, experimenting with these settings can be frustrating, and I've found that the default settings often produce better results when trying to produce somewhat realistic imagery.

Prompt: Since my original image was AI-generated, I entered the same prompt that was used to generate the original image. That seems to provide good results for realism. You can type any prompt you like, but when you request specifics, the quality level tends to go off the rails.

Process

Using Magnific AI Style Transfer, begin with the source image of the city.

To create my new styles, I relied on images from a stock library to which I hold a subscription. I found images that resembled the scene I was looking for. Is this an ethical way to use stock images? I would argue yes, since I pay for the privilege of accessing those images.

Style Transfer is still in beta and only outputs low-resolution images. However, Magnific Upscale excels in this regard. Once you understand how to adjust the settings appropriately, Magnific Upscale does a remarkable job of creating new data and textures to fill in the gaps.

In some cases, I even downsized the Style Transfer results further so that Magnific Upscale could hallucinate even more detail, often resulting in better outcomes.
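To put rough numbers on why shrinking first gives the upscaler more room to invent detail, here is a minimal arithmetic sketch. The function name and the pixel sizes are hypothetical and chosen only for illustration; they are not Magnific settings or actual values from this project.

```python
def upscale_factor_needed(source_px: int, target_px: int,
                          pre_downscale: float = 1.0) -> float:
    """Return how much the upscaler must enlarge the long edge to reach target_px.

    pre_downscale is the fraction the image is shrunk to before upscaling
    (1.0 = no shrink, 0.5 = half size). All values are illustrative.
    """
    if not 0 < pre_downscale <= 1:
        raise ValueError("pre_downscale must be in (0, 1]")
    shrunk_px = source_px * pre_downscale
    return target_px / shrunk_px


# Hypothetical example: a 1024 px wide style-transfer result,
# finished at 4096 px wide.
print(upscale_factor_needed(1024, 4096))       # 4.0 (no pre-shrink)
print(upscale_factor_needed(1024, 4096, 0.5))  # 8.0 (shrunk to half first)
```

Under these assumed numbers, halving the image first doubles the enlargement the upscaler has to perform, so a larger share of the final pixels is newly generated rather than carried over from the source.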

Conclusions

This project has prompted me to reconsider my relationship with image resolution, which is always at the forefront of any retoucher's mind, especially when clients provide less-than-ideal source material.

While it's not quite there yet, I can envision AI software like this solving many image resolution problems in the future. Like all things AI, it tends to produce results resembling concept art, but it does an impressive job, especially on images that are high-fidelity to begin with.

Original on the left, low res style transfer to night on the right.

Low res style transfer on the left, high res upscale with creative hallucinations on the right.

“New World” Key Art Comp 02

Here we are with Round Two of creating Key Art from scratch using only generative AI. This time we get into character development, wardrobe, ethics, and ways to manipulate AI to get what you want.

The AI tools used in this project include:

Midjourney: For image generation, layout exploration, and the creation of detailed plates to build the scene.

Magnific: To enhance the resolution of Midjourney's output and to style transfer images.

ChatGPT4: For writing prompts for both Midjourney and Magnific, with no manual prompt writing involved.

Click here for the LinkedIn article on the creation of this artwork

Consistent Style in Character Development

To produce the character assets, I employed a few techniques to ensure that each character possessed a distinct appearance while maintaining a consistent style, lighting, and wardrobe.

I tasked ChatGPT with generating personas by providing a list of essential details about each character.

I selected a reference image that captured the desired lighting, style, and wardrobe.

I then inputted the personas, along with the reference image, into Midjourney to develop unique characters that all shared the same lighting and wardrobe.

I started by developing the main character, Camila, then used her image as a reference to generate the rest. Nothing prevents me from drawing on other artists' work in the same way, whether by naming them in a prompt or by inputting their work directly. I conducted this experiment to explore the potential outcomes, and I discovered that I could effortlessly transfer styles to anything I desired, using any artist's work as a reference.

The ethical considerations are complex. I think directly training AI on an artist’s work is unethical and should be avoided. I also recognize that the nature of generative AI is derivative and that we are unlikely to be able to go back. With the capability at their disposal, it's inevitable that people will utilize it; the convenience is too compelling.

Like it or not, creating anything these days requires acknowledging that the creation is now part of the training data, and that's just the way it is.

Using ChatGPT4 to Write Midjourney Prompts

Another really effective method for getting a consistent style across multiple images is to use ChatGPT to edit and alter prompts for you.

In the example, I give ChatGPT the prompt I used to generate the image of the main character. Now I want the prompt to be exactly the same, except that it should describe a different character. ChatGPT can go in and change just the details you want changed.

I used one consistent prompt as the foundation for all the characters, with the same reference image as a baseline. To make each character distinct, I had ChatGPT alter key descriptors within the prompt, tailoring the details to capture the individual essence and attributes of each figure.
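The same idea can be sketched with a plain string template: keep the shared style wording fixed and swap only the character descriptors. This is a minimal illustration that uses Python string formatting in place of ChatGPT. The template wording, field names, and persona details are hypothetical placeholders, not the actual prompts used for this piece.

```python
# Shared wording stays identical for every character; only the
# {placeholders} change. All wording here is illustrative.
BASE_PROMPT = (
    "cinematic portrait of {name}, {description}, "
    "same wardrobe and lighting as the reference image"
)

# Hypothetical personas; the descriptions are placeholders.
PERSONAS = [
    {"name": "Camila", "description": "the determined lead character"},
    {"name": "Character B", "description": "a quiet supporting character"},
]


def build_prompt(template: str, persona: dict[str, str]) -> str:
    """Fill the shared template with one persona's details."""
    return template.format(**persona)


prompts = [build_prompt(BASE_PROMPT, p) for p in PERSONAS]
for prompt in prompts:
    print(prompt)
```

Because every prompt ends with the same style clause, the characters differ only where the descriptors differ, which is the property the ChatGPT-edited prompts were aiming for.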

“New World” Key Art Comp 01

This New World Key Art is a project that lets me explore the current state of generative AI: how effective it can be for real-world work, and whether the technology needs more time to develop.

The AI tools used in this project include:

Midjourney: For image generation, layout exploration, and the creation of detailed plates to build the scene.

Magnific: To enhance the resolution of Midjourney's output.

ChatGPT: For writing prompts for both Midjourney and Magnific, with no manual prompt writing involved.

Click here for a detailed article on the creation of this artwork

What AI Is Currently Good At:

Brainstorming and Concepting: I have found that using Midjourney as a very blunt tool to develop concepts and layouts is very useful. It will only get you 51% of the way there though. You must be able to see through to the vision of what you want to create.

Source Assets: Generative AI is also very useful for creating raw assets that you can use in a composition. This is all the stuff that I would normally go to a stock website for. Smoke, clouds, skies, foliage all output pretty well and can be used as supporting assets in compositions.

People and Faces: In my testing I have found that Midjourney is surprisingly good at generating photorealistic people if you know how to prompt it properly. This is likely because it has so many faces to reference in its training.

What AI Is Currently Terrible At:

Photorealistic Environments: AI can create incredible environments, but they tend to look like concept art no matter how you prompt them. I ran into this issue with this project and was forced to blur the background plates enough to hide the low quality.

Specifics: The main reason AI is not ready for client-facing and public-facing work is that it is by nature a generalist. Remember that its knowledge is based on what has already been done. If you are creating something worthwhile, chances are you are doing something new. This is why I am using AI more as a creator of assets than as a producer of finished work.

Quality: Let’s face it, the quality you can get out of AI isn’t the best, mostly because we are limited to low-res outputs. I have been playing with Magnific.ai recently, and it does a surprisingly good job of up-rezzing images and adding new details as it goes. It would be nice to have higher-res source assets from the start, though.